
Downloads

Demos:

– geexlab-demopack-mesh-shaders/gl/textured-quad/
– geexlab-demopack-mesh-shaders/vk/textured-quad/

Rendering a textured quad with mesh shaders follows the same principle as rendering an RGB triangle:

– RGB Triangle with Mesh Shaders in OpenGL

– RGB Triangle with Mesh Shaders in Vulkan

There is one additional vertex and a texture, but otherwise the code is very similar.

Here is the mesh shader. It is almost identical in OpenGL and Vulkan: the Vulkan version, shown below, applies an extra transformation to each vertex to convert from OpenGL to Vulkan clip-space conventions (pos.y = -pos.y; pos.z = (pos.z + pos.w) / 2.0):

    #version 450
    #extension GL_NV_mesh_shader : require

    // A single invocation per workgroup writes the whole quad.
    layout(local_size_x = 1) in;

    // Each workgroup outputs at most 4 vertices and 2 triangles.
    layout(triangles, max_vertices = 4, max_primitives = 2) out;

    // Custom vertex output block
    layout (location = 0) out PerVertexData
    {
      vec4 color;
      vec4 uv;
    } v_out[]; // [max_vertices]

    const float scale = 0.95;

    // Quad corners, in the order referenced by the primitive indices below.
    const vec3 vertices[4] = {
      vec3(-1,-1,0),
      vec3(-1,1,0),
      vec3(1,1,0),
      vec3(1,-1,0)
    };

    const vec3 colors[4] = {
      vec3(1.0,0.0,0.0),
      vec3(0.0,1.0,0.0),
      vec3(0.0,0.0,1.0),
      vec3(1.0,0.0,1.0)
    };

    const vec2 uvs[4] = {
      vec2(0.0,0.0),
      vec2(0.0,1.0),
      vec2(1.0,1.0),
      vec2(1.0,0.0)
    };

    void main()
    {
      // Positions. GL->VK conventions: flip Y and remap clip-space Z
      // from [-w, w] (OpenGL) to [0, w] (Vulkan).
      vec4 pos = vec4(vertices[0] * scale, 1.0);
      pos.y = -pos.y; pos.z = (pos.z + pos.w) / 2.0;
      gl_MeshVerticesNV[0].gl_Position = pos;

      pos = vec4(vertices[1] * scale, 1.0);
      pos.y = -pos.y; pos.z = (pos.z + pos.w) / 2.0;
      gl_MeshVerticesNV[1].gl_Position = pos;

      pos = vec4(vertices[2] * scale, 1.0);
      pos.y = -pos.y; pos.z = (pos.z + pos.w) / 2.0;
      gl_MeshVerticesNV[2].gl_Position = pos;

      pos = vec4(vertices[3] * scale, 1.0);
      pos.y = -pos.y; pos.z = (pos.z + pos.w) / 2.0;
      gl_MeshVerticesNV[3].gl_Position = pos;

      // Per-vertex attributes
      v_out[0].color = vec4(colors[0], 1.0);
      v_out[1].color = vec4(colors[1], 1.0);
      v_out[2].color = vec4(colors[2], 1.0);
      v_out[3].color = vec4(colors[3], 1.0);

      v_out[0].uv = vec4(uvs[0], 0, 1);
      v_out[1].uv = vec4(uvs[1], 0, 1);
      v_out[2].uv = vec4(uvs[2], 0, 1);
      v_out[3].uv = vec4(uvs[3], 0, 1);

      // Two triangles sharing the 0-2 diagonal: (0, 1, 2) and (2, 3, 0).
      gl_PrimitiveIndicesNV[0] = 0;
      gl_PrimitiveIndicesNV[1] = 1;
      gl_PrimitiveIndicesNV[2] = 2;
      gl_PrimitiveIndicesNV[3] = 2;
      gl_PrimitiveIndicesNV[4] = 3;
      gl_PrimitiveIndicesNV[5] = 0;

      // Number of primitives actually emitted (<= max_primitives).
      gl_PrimitiveCountNV = 2;
    }
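
To double-check what main() produces, its arithmetic can be replayed on the CPU. The snippet below is a minimal sketch in plain Lua, not taken from the demos and using no GeeXLab functions; the helper names gl_to_vk and build_positions are hypothetical. It applies the same GL->VK fix-up as the Vulkan shader (Vulkan's clip space has Y pointing down and a depth range of [0, w] instead of OpenGL's [-w, w]), then checks the index layout against the max_vertices and max_primitives limits declared in the shader.

```lua
-- CPU-side mirror of the Vulkan mesh shader's main(), plain Lua only.
-- Hypothetical helper names; not part of the GeeXLab demos.

local scale = 0.95

-- Same corner order as the shader's vertices[] and uvs[] arrays.
local vertices = { {-1, -1, 0}, {-1, 1, 0}, {1, 1, 0}, {1, -1, 0} }
local uvs      = { {0, 0},      {0, 1},     {1, 1},    {1, 0} }

-- GL->VK conventions: flip Y and remap clip-space Z from [-w, w] to [0, w].
local function gl_to_vk(x, y, z, w)
  return x, -y, (z + w) / 2.0, w
end

-- Builds the 4 clip-space positions the Vulkan mesh shader writes.
local function build_positions()
  local out = {}
  for i, v in ipairs(vertices) do
    local x, y, z, w = gl_to_vk(v[1] * scale, v[2] * scale, v[3] * scale, 1.0)
    out[i] = { x, y, z, w }
  end
  return out
end

-- Two triangles sharing the diagonal 0-2, as written to gl_PrimitiveIndicesNV.
local indices = { 0, 1, 2,  2, 3, 0 }
local primitive_count = math.floor(#indices / 3)  -- value of gl_PrimitiveCountNV

-- Limits from: layout(triangles, max_vertices = 4, max_primitives = 2) out;
local MAX_VERTICES, MAX_PRIMITIVES = 4, 2

local positions = build_positions()
assert(#positions <= MAX_VERTICES)
assert(primitive_count <= MAX_PRIMITIVES)
for _, idx in ipairs(indices) do
  assert(idx >= 0 and idx < #positions, "index out of range")
end

-- The uvs follow directly from the corner positions: uv = (xy + 1) / 2.
for i, v in ipairs(vertices) do
  assert(uvs[i][1] == (v[1] + 1) / 2 and uvs[i][2] == (v[2] + 1) / 2)
end

for i, p in ipairs(positions) do
  print(string.format("v%d: (%.3f, %.3f, %.3f, %.3f)", i - 1, p[1], p[2], p[3], p[4]))
end
```

Running it prints the four clip-space positions. Because the quad lies in the z = 0 plane with w = 1, every corner ends up at z = 0.5 after the remap.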

And here is the pixel shader, for both APIs:

    #version 450

    layout (location = 0) in PerVertexData
    {
      vec4 color;
      vec4 uv;
    } fragIn;

    layout (binding = 0) uniform sampler2D tex0;

    layout (location = 0) out vec4 FragColor;

    void main()
    {
      vec2 uv = fragIn.uv.xy;
      // Flip V. Negating assumes a REPEAT wrap mode, where -v samples like 1.0 - v.
      uv.y *= -1.0;
      vec4 t = texture(tex0, uv);
      // Modulate the texel by the interpolated vertex color.
      FragColor = fragIn.color * t;
    }
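
Negating V instead of computing 1.0 - v only works with a repeating sampler. The sketch below is a simplified model in plain Lua with hypothetical helper names; it clamps at the coordinate level and ignores texel centers. It assumes the demos use a REPEAT address mode, which the text does not state. With REPEAT, -v wraps to 1 - v, while a clamp-to-edge sampler would pin every negated coordinate to the edge row of the texture.

```lua
-- Simplified models of two sampler address modes for a single coordinate.
-- REPEAT keeps only the fractional part of the coordinate.
local function wrap_repeat(t)
  return t - math.floor(t)
end

-- CLAMP_TO_EDGE, simplified: clamp the coordinate to [0, 1].
local function wrap_clamp(t)
  return math.max(0.0, math.min(1.0, t))
end

-- Compare the shader's flip (uv.y *= -1.0) with 1.0 - v for a few values of v.
for _, v in ipairs({ 0.25, 0.5, 0.75 }) do
  local negated = -v
  print(string.format("v=%.2f  repeat(-v)=%.2f  clamp(-v)=%.2f  1-v=%.2f",
    v, wrap_repeat(negated), wrap_clamp(negated), 1.0 - v))
end
```

If the sampler's address mode is not known, uv.y = 1.0 - uv.y gives the same flip under both modes, since 1 - v already lies in [0, 1].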

On the Lua side, the code is nearly identical. The only difference is the texture: in OpenGL, the texture has to be bound to a texture unit before rendering, while in Vulkan the texture is a resource added to the descriptor set used by the pipeline.

The drawing code in the OpenGL demo:

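    -- Bind the texture to texture unit 0 (binding = 0 in the pixel shader).
    gh_texture.bind(tex, 0)
    gh_gpu_program.bind(nv_mesh_prog)
    -- first = 0, count = 1: launch a single mesh shader workgroup.
    gh_renderer.draw_mesh_tasks(0, 1)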

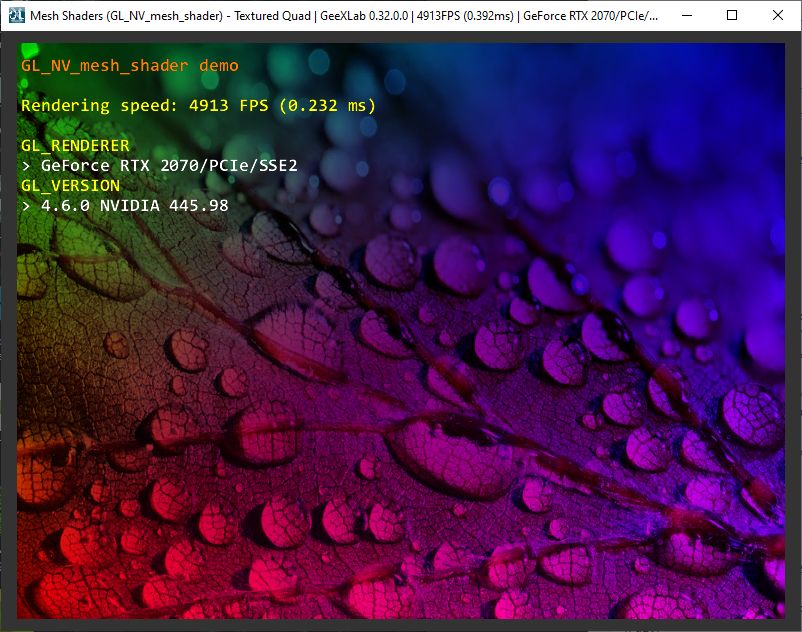

OpenGL demo:

The drawing code in the Vulkan demo:

    -- The descriptor set holds the texture (binding = 0 in the pixel shader).
    gh_vk.descriptorset_bind(ds)
    gh_vk.pipeline_bind(mesh_pipeline)
    gh_vk.draw_mesh_tasks(0, 1)

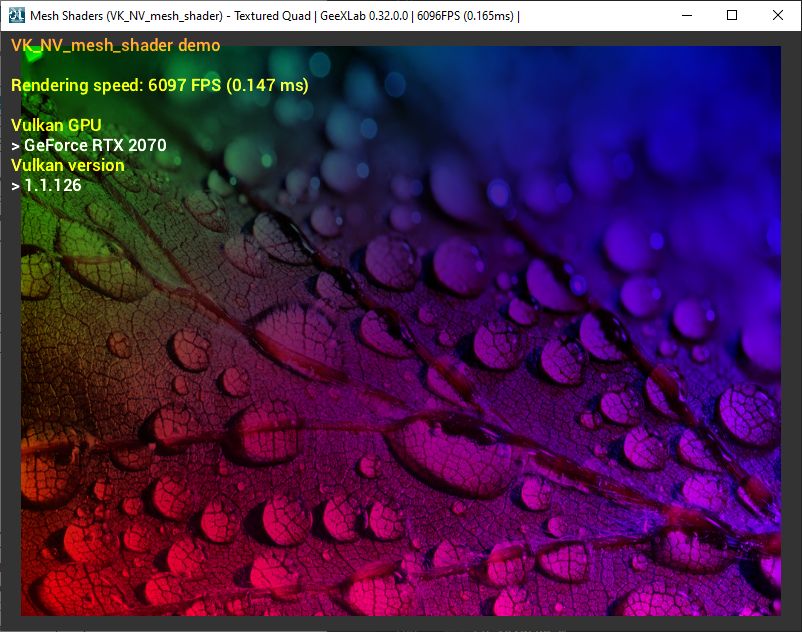

Vulkan demo: